Introduction to Bayesian Linear Models

03 - Priors

University of Edinburgh

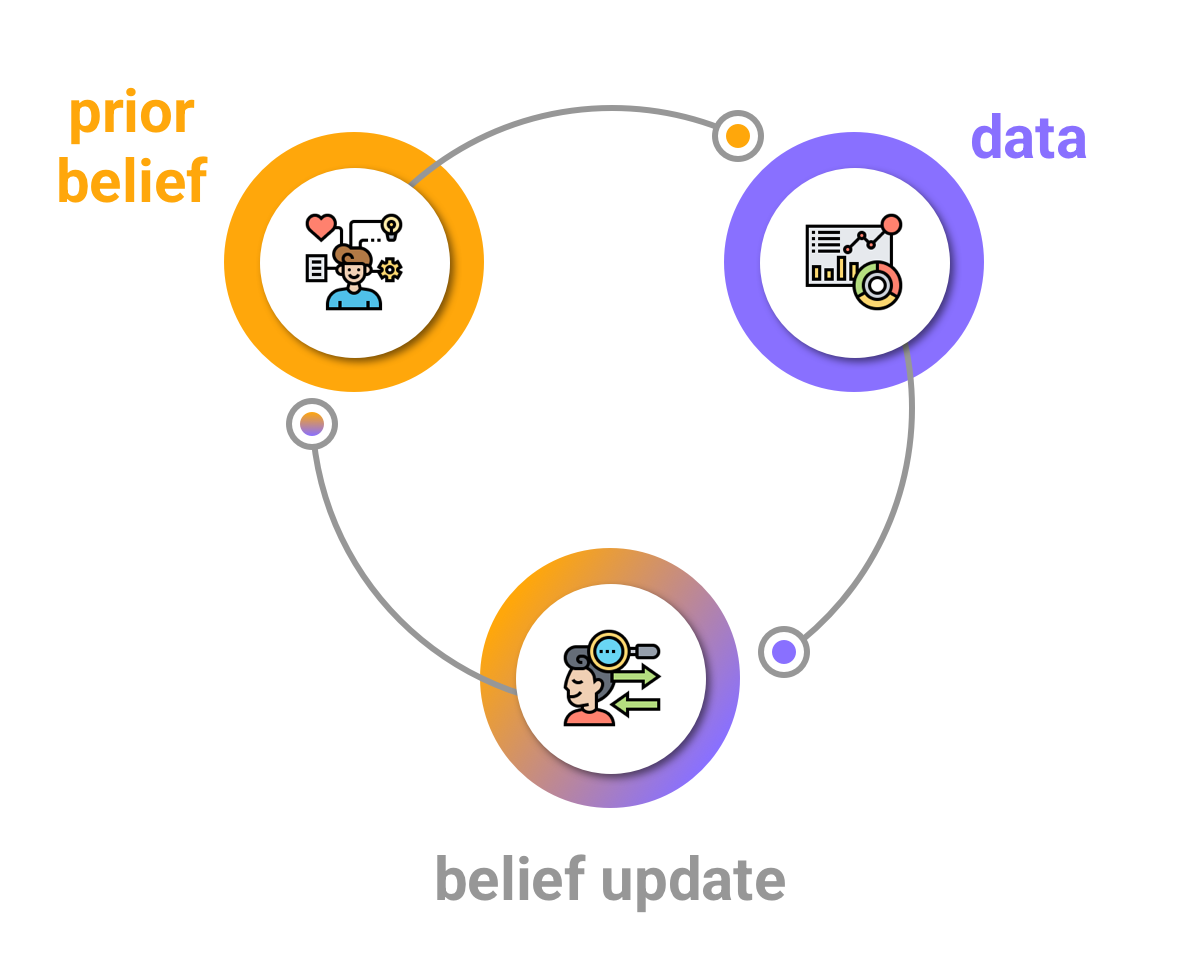

Bayesian belief update

Let’s start small

\[\text{RT}_i \sim Gaussian(\mu, \sigma)\]

\(\text{RT}_i\): Reaction Times

\(\sim\): distributed according to a

\(Gaussian()\): Gaussian distribution

with mean \(\mu\) and standard deviation \(\sigma\)

We assume RTs are distributed according to a Gaussian distribution (the assumption can be wrong)

…and in fact it is (RTs are not Gaussian, but we will get to that later).
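As a rough illustration of what the Gaussian assumption implies, the sketch below simulates RTs in base R. The values of `mu` and `sigma` are hypothetical placeholders, not estimates from any data set.

```r
# Hypothetical values for illustration only; they are not estimates from data.
set.seed(1)
mu <- 1000     # assumed mean RT in ms
sigma <- 300   # assumed standard deviation in ms

# Draw 1000 simulated RTs from Gaussian(mu, sigma)
rt_sim <- rnorm(1000, mean = mu, sd = sigma)
summary(rt_sim)

# A Gaussian assigns non-zero probability to impossible values (negative RTs)
p_negative <- pnorm(0, mean = mu, sd = sigma)
p_negative
```

The probability of a negative RT is small under these values but never exactly zero, which is one reason the Gaussian assumption can be wrong for RTs.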

The empirical rule
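The empirical rule says that, for a Gaussian distribution, roughly 68%, 95% and 99.7% of the probability mass falls within 1, 2 and 3 standard deviations of the mean. A minimal check in base R (the helper name `within_k_sd` is made up for this sketch):

```r
# Probability mass within k standard deviations of the mean of a Gaussian
within_k_sd <- function(k) pnorm(k) - pnorm(-k)

coverage <- sapply(1:3, within_k_sd)
round(coverage, 3)
# 0.683 0.954 0.997
```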


Distribution of RTs

\[\text{RT}_i \sim Gaussian(\mu, \sigma)\]

Pick a \(\mu\) and \(\sigma\).

Report them here: https://forms.gle/M7juHsxyv5Vbs7Gx7.

Let’s see what we got!

Distribution of RTs

\[\text{RT}_i \sim Gaussian(\mu, \sigma)\]

\[\mu = ...?\]

\[\sigma = ...?\]

When uncertain, use probabilities!

Priors of the parameters

\[ \begin{align} \text{RT}_i & \sim Gaussian(\mu, \sigma)\\ \mu & \sim Gaussian(\mu_1, \sigma_1)\\ \sigma & \sim Cauchy_{+}(0, \sigma_2)\\ \end{align} \]

Let’s pick \(\mu_1\) and \(\sigma_1\).

We can use the empirical rule.

Prior for \(\mu\)

\[ \begin{align} \text{RT}_i & \sim Gaussian(\mu, \sigma)\\ \mu & \sim Gaussian(\mu_1, \sigma_1)\\ \sigma & \sim Cauchy_{+}(0, \sigma_2)\\ \end{align} \]

Let’s say that the mean is between 500 and 2500 ms at 95% confidence.

Get \(\mu_1\)

mean(c(500, 2500)) = 1500

Get \(\sigma_1\)

(2500 - 1500) / 2 = 500
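The two steps above can be sketched in R, with a quick check of the interval that the resulting prior implies. The variable names (`lower`, `upper`, `interval_95`) are just for this example.

```r
lower <- 500    # lower bound of the 95% interval for the mean (ms)
upper <- 2500   # upper bound of the 95% interval for the mean (ms)

mu_1 <- mean(c(lower, upper))   # midpoint of the interval
sigma_1 <- (upper - mu_1) / 2   # half-width divided by 2 (empirical rule)

# Central 95% interval implied by Gaussian(mu_1, sigma_1)
interval_95 <- qnorm(c(0.025, 0.975), mean = mu_1, sd = sigma_1)
interval_95
```

The implied interval is roughly 520 to 2480 ms rather than exactly 500 to 2500 ms, because the empirical rule rounds 1.96 standard deviations up to 2.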

Prior for \(\mu\)

\[ \begin{align} \text{RT}_i & \sim Gaussian(\mu, \sigma)\\ \mu & \sim Gaussian(\mu_1 = 1500, \sigma_1 = 500)\\ \sigma & \sim Cauchy_{+}(0, \sigma_2)\\ \end{align} \]

Prior for \(\mu\): plot

Prior for \(\sigma\)

Prior for \(\sigma\): plot

Prior predictive checks: sample prior


Family: gaussian

Links: mu = identity; sigma = identity

Formula: RT ~ 1

Data: mald (Number of observations: 3000)

Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1; total post-warmup draws = 4000

Regression Coefficients:

| Parameter | Estimate | Est.Error | l-95% CI | u-95% CI | Rhat | Bulk_ESS | Tail_ESS |
|---|---|---|---|---|---|---|---|
| Intercept | 1505.95 | 489.24 | 536.86 | 2446.34 | 1.00 | 2753 | 2363 |

Further Distributional Parameters:

| Parameter | Estimate | Est.Error | l-95% CI | u-95% CI | Rhat | Bulk_ESS | Tail_ESS |
|---|---|---|---|---|---|---|---|
| sigma | 270.58 | 5598.05 | 0.99 | 845.19 | 1.00 | 2921 | 2014 |

Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS and Tail_ESS are effective sample size measures, and Rhat is the potential scale reduction factor on split chains (at convergence, Rhat = 1).

Prior predictive checks: plot

Prior predictive checks: plot (zoom in)
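The logic of a prior predictive check can also be sketched by hand in base R: draw parameters from their priors, then draw data given those parameters. This is a simplified stand-in for the brms output shown here, not the code that produced it. The scale `scale_sigma` of the half-Cauchy prior is a hypothetical value, since the slides leave \(\sigma_2\) unspecified.

```r
set.seed(42)
n_draws <- 4000

# Prior for mu: Gaussian(1500, 500), as chosen for the mean RT
mu_draws <- rnorm(n_draws, mean = 1500, sd = 500)

# Prior for sigma: half-Cauchy(0, scale_sigma).
# scale_sigma is HYPOTHETICAL; the source does not state sigma_2.
scale_sigma <- 25
sigma_draws <- abs(rcauchy(n_draws, location = 0, scale = scale_sigma))

# One simulated RT per prior draw
rt_prior_pred <- rnorm(n_draws, mean = mu_draws, sd = sigma_draws)

# Summaries of the prior predictive distribution
quantile(rt_prior_pred, probs = c(0.025, 0.5, 0.975))
mean(rt_prior_pred < 0)   # share of impossible (negative) simulated RTs
```

Taking the absolute value of Cauchy draws centred on 0 gives half-Cauchy draws. The heavy tail occasionally produces very large values of sigma, which is why a zoomed-in plot is useful.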

Run the model

Model summary

Family: gaussian

Links: mu = identity; sigma = identity

Formula: RT ~ 1

Data: mald (Number of observations: 3000)

Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1; total post-warmup draws = 4000

Regression Coefficients:

| Parameter | Estimate | Est.Error | l-95% CI | u-95% CI | Rhat | Bulk_ESS | Tail_ESS |
|---|---|---|---|---|---|---|---|
| Intercept | 1046.60 | 6.34 | 1034.32 | 1058.94 | 1.00 | 3563 | 2804 |

Further Distributional Parameters:

| Parameter | Estimate | Est.Error | l-95% CI | u-95% CI | Rhat | Bulk_ESS | Tail_ESS |
|---|---|---|---|---|---|---|---|
| sigma | 347.51 | 4.45 | 338.90 | 356.76 | 1.00 | 3678 | 2772 |

Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS and Tail_ESS are effective sample size measures, and Rhat is the potential scale reduction factor on split chains (at convergence, Rhat = 1).

Posterior predictive checks

Summary

Priors are probability distributions that convey prior knowledge about the model parameters.

Gaussian family

- \(\mu\): Gaussian prior.

- \(\sigma\): (Truncated) Cauchy prior (but also Student-t and others).

Use the empirical rule to work out Gaussian priors and the HDInterval::inverseCDF() function for other families.

Prior predictive checks are fundamental and should be run during the study design, before data collection (or in any case without being informed by the data).
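For a prior that is not Gaussian, the empirical rule does not apply, so the scale has to come from the quantile function. The half-Cauchy has a closed-form quantile function, so the sketch below uses base R only. It is an alternative to the package function mentioned above, not a demonstration of it. The helper `qhalfcauchy` and the belief encoded in `upper_95` are hypothetical.

```r
# Quantile function of a half-Cauchy(0, scale): scale * tan(pi * p / 2)
qhalfcauchy <- function(p, scale) scale * tan(pi * p / 2)

# HYPOTHETICAL belief: sigma is below 1000 ms with 95% probability
upper_95 <- 1000

# Solve for the scale that puts the 95th percentile at upper_95
sigma_2 <- upper_95 / qhalfcauchy(0.95, scale = 1)
sigma_2

# Check: the 95th percentile under this scale should recover upper_95
qhalfcauchy(0.95, scale = sigma_2)
```

Because the scale only multiplies the quantile function, dividing the target value by the unit-scale quantile is enough; no numerical search is needed for this family.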